Nadja Schinkel-Bielefeld

Principal Scientist, Germany

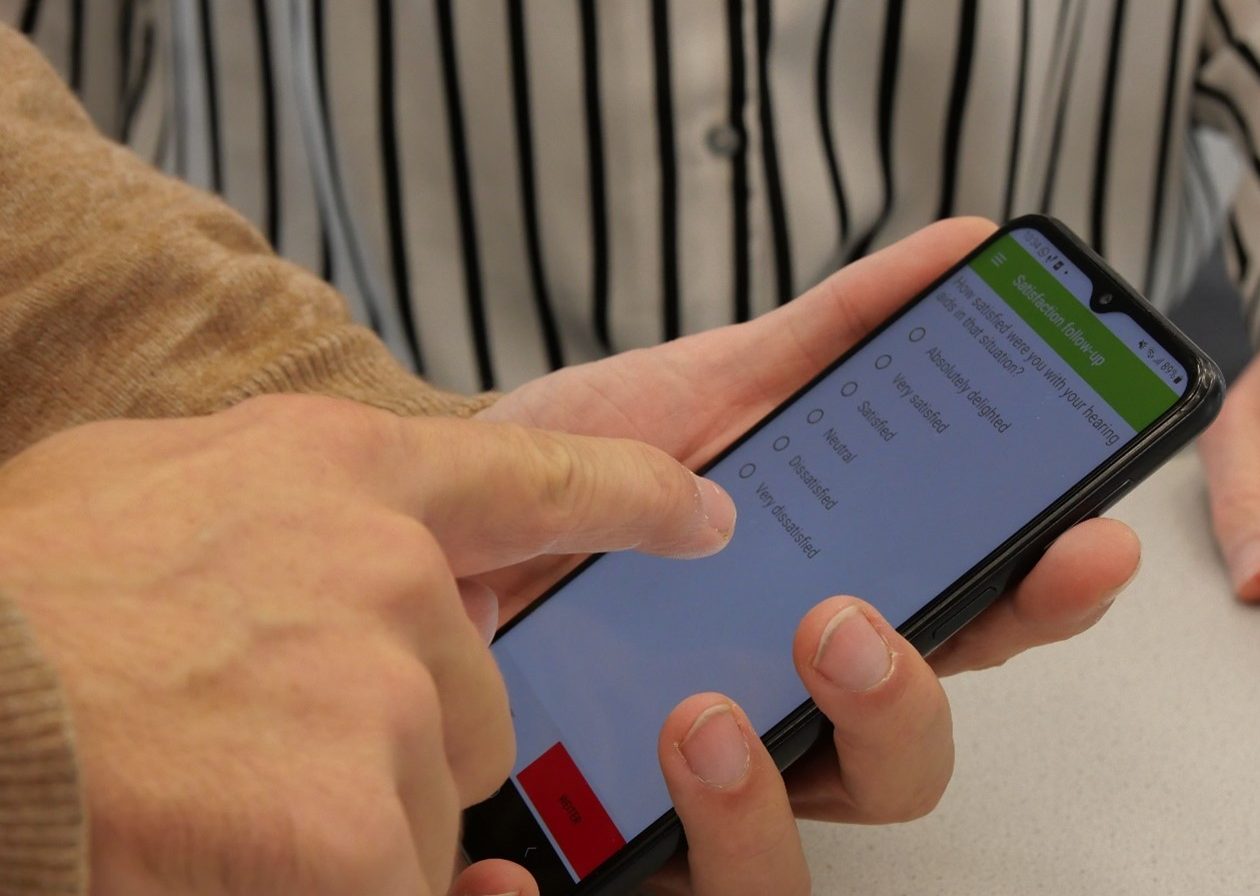

Ecological Momentary Assessment (EMA) has recently become popular in audiology for capturing real-world hearing aid performance and user experiences. However, challenges remain, including rating biases, behavioral adaptations, careless responses, and study design limitations.

These issues raise concerns about data validity, participant burden, and the ability to detect meaningful differences between hearing aid programs.

By collecting surveys in everyday environments, EMA minimizes recall bias and, together with objective measures of the environment and hearing aid algorithms, provides richer insights than retrospective self-reports.

Our research integrates findings from multiple studies to explore how EMA can be refined to assess real-world hearing aid use more accurately and reliably, focusing on:

Over the years, we have collaborated with researchers and universities (e.g., Jade Hochschule, Technische Hochschule Lübeck, University of Southern Denmark) across multiple studies. Contributions from the EMA Methods in Audiology Working Group have also helped refine methodologies for more accurate real-world hearing aid assessments.

Participants provided self-reports on speech understanding, sound quality, and listening effort, which were analyzed alongside objective hearing aid data.

Key methodological aspects examined:

The following highlights our findings on several factors affecting EMA reliability.

For a deeper dive into the research behind these findings, explore our research papers in the library section below.

Future research to improve EMA will focus on refining rating scales to prevent ceiling effects and enhance response sensitivity.

It will also explore dyadic EMA, which captures input from conversation partners, and incorporate short-term retrospective surveys to assess situations where participants cannot respond immediately.

Additionally, insights from EMA will be applied to optimize hearing aid fitting in clinical practice.

Refining EMA methodologies will lead to more accurate and personalized hearing aid recommendations, benefiting both researchers and clinicians.

These improvements will help:

With refined methodology, EMA can become an indispensable tool for designing and optimizing hearing aid technology, ultimately improving the quality of life for users worldwide.